Part 1: The Evidence Against Charge Adjustment

Ben Lipscomb

Many High-Performance HVAC Today readers are at least familiar with utility energy efficiency programs that pay an incentive for adjusting refrigerant charge. These programs are typically designed to correct low or high refrigerant charges in residential and light commercial HVAC systems. Over the past two decades, many sources have pointed out the significant opportunity in correcting refrigerant charge.

The story goes that charge deficiencies are widespread in split systems and rooftop units. This equipment is nearly ubiquitous in both residential and light commercial buildings, creating a huge energy savings opportunity. The premise seems so simple and promising that literally hundreds of millions of dollars have been spent nationally over the past decade to pursue these energy savings. Here’s an unsettling question to ponder: What if we’ve been wrong all along?

Tough Questions, Mixed Results

Evaluation, Measurement and Verification (EM&V) of Refrigerant Charge Adjustment (RCA) programs has produced mixed results over the past 10 years. Some studies found excellent savings, while others had trouble detecting savings at all. One study even indicated negative overall savings for comprehensive equipment maintenance that included RCA as one measure.

To understand what’s behind the highly variable results, it’s important to understand that EM&V impact evaluations can take several different approaches to answer the same question – how much savings did the program achieve?

For RCA, EM&V faces a particularly difficult challenge. The typical savings claimed for RCA tend to be small compared to the energy use of the HVAC equipment, and are downright minuscule compared to the energy use of a whole building. This makes direct measurement of the savings very difficult and expensive, or simply impossible.

Further confounding the results, many RCA programs include a broader tune-up or maintenance program. This means there are many other measures that also impact energy use, so it’s easy to lose track of the performance of the RCA measure itself.

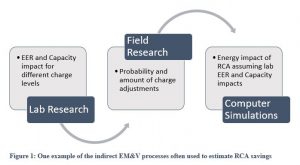

To address these challenges, some extremely indirect methods have been developed for EM&V. One approach is to use laboratory research data to establish the impact of varying levels of refrigerant charge deficiencies on equipment efficiency and capacity. This is then coupled with program data on refrigerant charge addition or removal amounts to estimate the efficiency and capacity impacts for each adjustment that the program made.

Finally, the efficiency and capacity impacts are simulated in a building energy simulation program like eQUEST or EnergyPlus to estimate the annual energy savings.
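The chain described above (lab data, then program adjustment data, then simulation) can be sketched in a few lines. This is only an illustration: the lab curve values, the function names, and the 3,000 kWh baseline are hypothetical, the curve covers undercharge only, capacity effects are ignored, and a simple efficiency ratio stands in for a full building simulation.

```python
# Hypothetical lab curve: (charge as a fraction of factory charge,
# efficiency relative to a correctly charged unit). Illustrative values only.
LAB_CURVE = [(0.60, 0.80), (0.80, 0.93), (1.00, 1.00)]


def relative_efficiency(charge_fraction: float) -> float:
    """Linearly interpolate the hypothetical lab curve."""
    points = sorted(LAB_CURVE)
    if not points[0][0] <= charge_fraction <= points[-1][0]:
        raise ValueError("charge fraction is outside the lab data range")
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        if x0 <= charge_fraction <= x1:
            return y0 + (y1 - y0) * (charge_fraction - x0) / (x1 - x0)
    raise ValueError("unreachable for a sorted, non-empty curve")


def estimated_annual_savings_kwh(
    charge_before: float, charge_after: float, baseline_cooling_kwh: float
) -> float:
    """Crude stand-in for the simulation step.

    Assumes a fixed cooling load, so energy use scales inversely
    with relative efficiency.
    """
    eff_before = relative_efficiency(charge_before)
    eff_after = relative_efficiency(charge_after)
    energy_after = baseline_cooling_kwh * eff_before / eff_after
    return baseline_cooling_kwh - energy_after


if __name__ == "__main__":
    # A unit found at 80% of factory charge and brought up to 100%.
    print(estimated_annual_savings_kwh(0.80, 1.00, 3000.0))
```

Every step in this sketch carries its own error: the lab curve, the recorded adjustment amount, and the simplified energy model. The final savings figure inherits all of them.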

As you can imagine, there are so many inaccuracies and assumptions in this process that it’s difficult or impossible to even put a number on the overall uncertainty of RCA program savings. Nonetheless, a recent study conducted in California examines several aspects of these uncertainties and provides a starting point for understanding them.

EM&V, as practiced today in most utility programs, often relies on laboratory research data to establish how varying levels of refrigerant charge deficiency affect equipment efficiency and capacity. Evidence increasingly shows this approach to be overly complicated and inaccurate.

The California Public Utilities Commission Study of Deemed HVAC Measures Uncertainty Year 3 Report (HVAC 4)1 aims to better understand the energy savings uncertainty of three types of HVAC measures, including RCA. The findings show that only correcting the charge on a highly undercharged system improves cooling efficiency or capacity enough to exceed the uncertainty surrounding that improvement.

In other words, the energy savings benefits of doing RCA by itself are questionable except in the case of a highly undercharged system. Furthermore, addressing an overcharged system is likely to produce a negative benefit, with most scenarios showing a decrease in efficiency and capacity.
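One way to picture the comparison between an improvement and its uncertainty is a simple threshold check. The function name and all percentages below are hypothetical and are not taken from the study; they only mirror the pattern the report describes.

```python
def exceeds_uncertainty(improvement_pct: float, uncertainty_pct: float) -> bool:
    """Return True only if the estimated improvement is larger than its uncertainty."""
    return improvement_pct > uncertainty_pct


# Hypothetical (improvement %, uncertainty %) pairs, for illustration only.
CASES = {
    "highly undercharged": (12.0, 5.0),
    "mildly undercharged": (2.0, 5.0),
    "overcharged": (-1.0, 5.0),
}

RESULTS = {name: exceeds_uncertainty(*values) for name, values in CASES.items()}

if __name__ == "__main__":
    for name, detectable in RESULTS.items():
        print(f"{name}: benefit distinguishable from uncertainty = {detectable}")
```

Under these assumed numbers, only the highly undercharged case clears its uncertainty band.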

The HVAC 4 study also looks at the uncertainty of RCA when non-RCA faults such as economizer failures are addressed, and the findings lend even less credibility to the idea that RCA itself produces any measurable benefit. In fact, any RCA benefits realized after addressing other faults were overshadowed by the uncertainty of the benefit.

Overall benefits produced by addressing non-RCA faults in addition to RCA faults can be traced primarily to the benefit that addressing non-RCA faults produces. A couple of conclusions within the study itself do a great job summing up the results:

- ‘Only units with egregious charge offsets and non-RCA faults are expected to be significantly impacted by adjusting the charge to factory levels.’

- ‘Addressing other non-RCA faults appears to be more beneficial than addressing the charge offset fault, itself.’

One final point to consider is that this study only partially examined the uncertainty of the RCA measure.

It didn’t consider the uncertainty of lab instrumentation, manufacturing variability, performance responses to varying outdoor and indoor environmental conditions, persistence of the RCA benefits, uncertainty in the simulations used to project energy savings, or the relative uncertainties of the various EM&V methods that have been used for RCA.

If you consider the additional uncertainties inherent in this chain of dependencies, it’s easy to see why EM&V results have been all over the place.

Should RCA be an Energy Efficiency Measure?

With these conclusions in hand, let’s consider whether RCA should continue as a utility energy efficiency (EE) program measure. The first thing to think about is what percentage of systems out there are operating with egregious charge deficiencies.

In one study2 of 4,168 air conditioners that received refrigerant charge diagnostics and adjustments, approximately 18% received a charge addition of 20% or higher. With roughly 100 million residential and small commercial HVAC units in the United States, significant improvements from RCA on even 18% of them would still be very beneficial.
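The scale implied by those figures is easy to work out. The sketch below uses only the numbers quoted above. Applying the study share to the national stock is a rough extrapolation, assumed here purely for illustration, and the constant names are made up.

```python
STUDY_UNITS = 4_168          # air conditioners in the cited field study
LARGE_ADDITION_SHARE = 0.18  # share that received a charge addition of 20% or more
US_UNITS = 100_000_000       # rough national stock of residential and small commercial units

# Units in the study that received a large charge addition.
study_count = round(STUDY_UNITS * LARGE_ADDITION_SHARE)

# The same share applied to the national stock (assumes the sample is representative).
national_count = round(US_UNITS * LARGE_ADDITION_SHARE)

if __name__ == "__main__":
    print(f"Study units with large additions: about {study_count}")
    print(f"Implied national units: about {national_count:,}")
```

That works out to roughly 750 units in the study and about 18 million units nationally, if the study share holds across the whole stock.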

Note, however, that the 18% figure counts units that received upward adjustments of 20% or higher, not units confirmed to need them. Did they need the adjustments in the first place? Did the adjustments produce a benefit?

Program Design Flaws

RCA programs typically pay out an incentive for making charge adjustments, which are assumed to produce savings. Some programs also pay a smaller incentive for diagnostics, which don’t produce savings directly, but in theory should lead to contractors finding and fixing more charge deficiencies.

What happens in practice is that some less scrupulous contractors make as many adjustments as they can, whether they are truly beneficial or not.

Why? Because the economics of the program drive them to adjust. Once you’re on the roof or in the home and have the gauges on, it’s easy to add or remove an ounce or two of refrigerant and claim the larger incentive.

Some programs leave the charge diagnostics entirely to the contractor, requiring them to make a judgement about whether to add or remove charge, potentially based only on limited training and understanding of the refrigeration cycle.

Verification on these programs typically happens only after the fact, so if the system is running all right after the RCA measure is claimed, there’s no repercussion for the contractor for incorrectly interpreting a charge fault or making an unnecessary adjustment. Refrigeration cycle diagnostics are complex, and many different types of issues exist that can easily confuse a technician, leading to frequent misdiagnoses and unnecessary charge adjustments.

Is More Data the Answer?

Some programs prescribe a specific diagnostic approach, or even require a computerized refrigerant manifold with embedded fault detection and diagnostics (FDD) to collect data and identify issues. What if, using FDD, a program could be developed that could effectively target only cases of egregious undercharge, therefore providing a high likelihood that significant measurable savings materialize?

There are several major issues with this idea, summarized below.

- Symptoms of highly undercharged systems are more likely to be noticed by occupants in the form of low cooling capacity causing comfort issues or high utility bills. Therefore, they are also more likely to get fixed outside the influence of a utility program. This diminishes the amount of savings attributable to the program, and/or shortens the measure life that can reasonably be claimed.

- There’s a serious environmental issue with incentivizing charge adjustments on systems with very low charge. A very low charge indicates a significant leak in a system that is designed to operate as a sealed system. If refrigerant is merely added to the system, it will continue to leak, making this a temporary fix at best.

Worse than that, the leak vents refrigerant into the atmosphere. The most common refrigerants in use in residential and commercial air conditioners are R-22 and R-410A. R-22 is an ozone-depleting substance, and both R-22 and R-410A are greenhouse gases with a Global Warming Potential thousands of times that of carbon dioxide.

- Properly dealing with a leak is an expensive and time-consuming process, the cost of which cannot be justified by energy savings alone. Leaks can be very difficult to find. It takes experience, and in some cases a significant amount of time.

After finding the leak, the repair typically involves recovering all refrigerant in the system and then fixing the leak or replacing the leaking component (e.g., evaporator, condenser, or compressor).

The system then needs to be pressurized with nitrogen and/or pulled into a vacuum to test for leaks and eliminate moisture before it is finally recharged. This whole process typically takes from half a day to a full day.

- Current FDD approaches produce flawed diagnostics. A 2014 Purdue University study3 evaluated the effectiveness of three FDD approaches and found that all three produced false alarms more than 25% of the time and misdiagnoses in more than 30% of cases.

False alarms lead technicians to take action when none is necessary, wasting resources and potentially leading to negative impacts on system performance. Misdiagnosis can lead a technician to take the wrong action in trying to correct the fault.

- The use of refrigeration gauges requires tapping into an otherwise sealed system. Each time gauges are placed on the equipment, there is a risk of contamination and a small amount of refrigerant loss.

Even small amounts of contamination can cause a major loss in efficiency. Lab studies4 show that only 0.3% nitrogen (representing air in the system) reduces efficiency by 18% for non-TXV systems and 13% for TXV systems.
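To put the FDD false-alarm figure from the list above in program terms, consider a hypothetical batch of fault-free units. This sketch assumes the false-alarm rate is the share of fault-free systems flagged as faulty, and it uses 25% as a lower bound because the study reports rates above that level. The batch size of 1,000 and the function name are made up for illustration.

```python
def expected_false_alarms(fault_free_units: int, false_alarm_rate: float) -> float:
    """Expected number of fault-free units incorrectly flagged as faulty."""
    if not 0.0 <= false_alarm_rate <= 1.0:
        raise ValueError("false_alarm_rate must be between 0 and 1")
    return fault_free_units * false_alarm_rate


if __name__ == "__main__":
    # Hypothetical batch of 1,000 units that need no charge adjustment.
    flagged = expected_false_alarms(1_000, 0.25)
    print(f"At least {flagged:.0f} of 1,000 fault-free units would be flagged")
```

At that floor, at least 250 of every 1,000 fault-free units would be flagged, and each of those is an unnecessary service action.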

There’s no question that proper charge is critical for system performance, reliability, and efficiency. However, the evidence is overwhelmingly against the notion that running around doing charge adjustments in a mass-market utility program produces cost-effective energy savings.

In the next installment of this two-part series we’ll look at how charge diagnostics and adjustment can be responsibly incorporated into the Performance-Based Contractor’s arsenal of skills as part of a systematic approach to improving performance.

Ben Lipscomb is a registered Professional Engineer with over 14 years of experience in the HVAC industry including laboratory and field research, Design/Build contracting, and utility energy efficiency program design. He is National Comfort Institute’s engineering manager, and may be contacted at benl@ncihvac.com.

References

2 Mowris et al., 2004, Field Measurements of Air Conditioners with and without TXVs. ncilink.com/aceee

3 Yuill et al., 2014, Evaluating Fault Detection and Diagnostic Tools with Simulations of Multiple Vapor Compression Systems. ncilink.com/purdue

4 Mowris, 2012, Laboratory Measurements and Diagnostics of Residential HVAC Installation and Maintenance Faults. ncilink.com/mowris

Excellent article Ben – I do think that the advent of transducer technology will allow for more accurate refrigerant charge analysis without the refrigerant loss inherent with conventional hoses & gauges. Looking forward to the next article!